Frontier tech to strengthen information integrity

A blog by Miranda Dixon, a member of the Frontier Tech Hub

Pilots: Online amplification networks detector and AI Literacy Lab

Misinformation and disinformation are everywhere. Over the last year, we’ve been working with around 80 members of the FCDO, spanning eight thematic areas across four continents, to interrogate real-time concerns about harmful digital information. We found it to be intensifying real-life threats to national security, democracy, human rights, and humanitarian response.

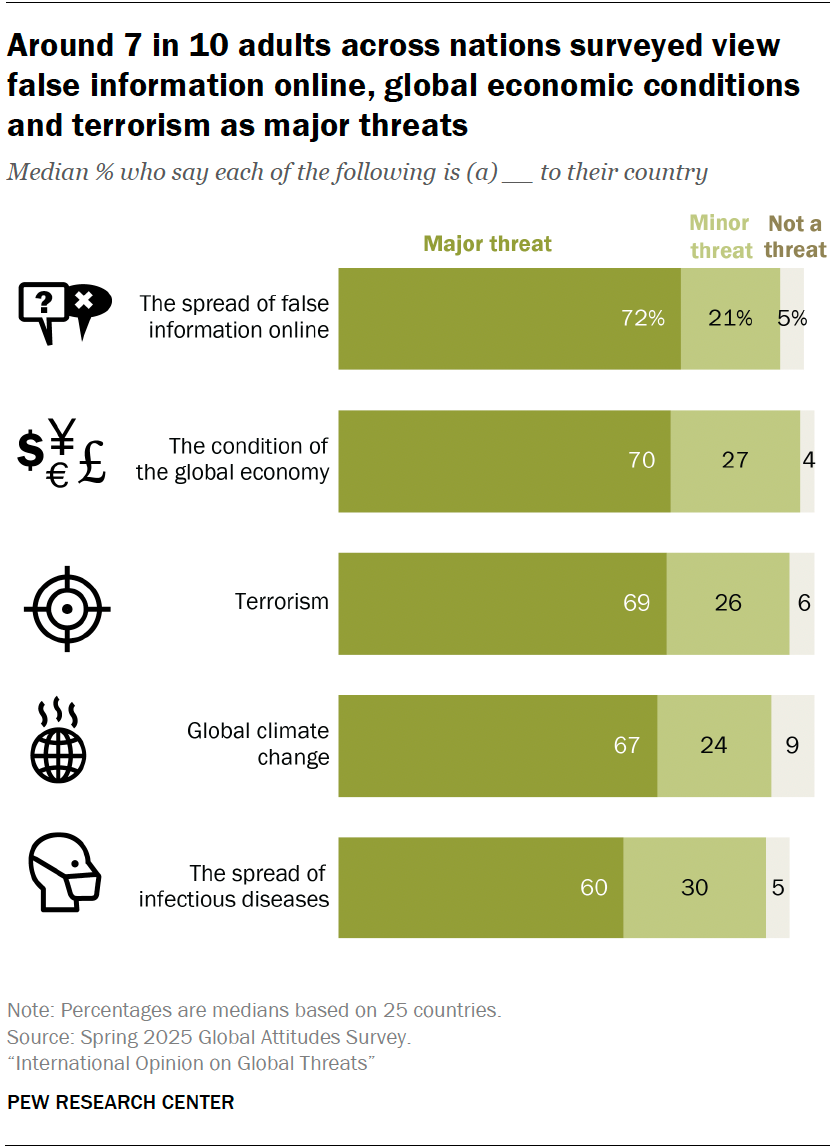

Recent developments have exacerbated these challenges. The changing nature of the funding and policy landscape creates uncertainty for many organisations operating in this area. Meta's decision to scale back its fact-checking capabilities and social media companies’ increasing employment of ‘anti-scraping’ engineers have further restricted open data research and analysis. A recent Pew Research Center survey reveals the scale of public concern: 72% of respondents across 35 countries now identify misinformation as a major threat—the highest on record, displacing climate change from its long-held position as the world's top concern.

While tech has been the great enabler of these threats, it also holds untapped potential to protect and strengthen democracy. The journey ahead is nebulous and complex, but we are at a crossroads where we must move forward to test and embed new solutions, with our eyes wide open.

Today, we’re announcing a new cohort of pilots harnessing frontier technologies to strengthen information integrity. The first two profiles have been published:

Online amplification networks detector: Can a user-friendly tool help detect hidden connections between disinformation websites and social media accounts in Bulgaria?

AI Literacy Lab: Can an AI-powered interactive learning platform effectively teach citizens in the Baltics to identify and critically evaluate deepfakes and synthetic media?

Why now? Understanding the landscape

Five years ago, Europe had little in place to prevent mis/disinformation. Today, there are laws, networks, and much greater awareness. Democratic participation is growing thanks to the wealth of digital tools. With tools like LLMs, there is real potential to scale fact-checking efforts, streamline social media investigations, and empower local actors to help uphold information integrity.

The journey is already underway within FCDO and beyond: tackling misinformation and disinformation, supporting human rights, countering the rollback of rights, and developing the capacity of CSOs to build information integrity.

But alongside these measures, it’s important to explore an approach that acknowledges a complex reality: it may ultimately be impossible to prevent damaging content from being published, particularly given the proliferation of AI-generated content. Between 2022 and 2024, the number of documented disinformation campaigns in Africa alone quadrupled.

With this in mind, we have been exploring ways to increase information integrity. This involves better equipping civil society organisations (CSOs) to identify and mitigate information threats and upskilling communities to think critically about the information they consume.

Information threats are a growing issue worldwide, and we are starting in Eastern Europe. Here, as in many places across the globe, they are intertwined with geopolitics, civic space, and democratic resilience. Many CSOs have told us they have the insight, evidence, and networks to respond to emerging threats, but lack access to the technology, expertise, and data infrastructure needed to keep pace with the speed and sophistication of malign actors and changing technology.

The term “mushroom websites” refers to coordinated, semi-automated networks of websites and social media accounts that generate, disseminate, and amplify disinformation. These networks are increasingly shaping the information ecosystem in Bulgaria and the Western Balkans.

Putting tools in the hands of civic space defenders

Effective tools are mostly inaccessible to non-technical users. When you see efforts to debunk or defuse the impacts of mis/disinformation, there’s almost always someone with specialist digital skills and social monitoring technologies behind it.

These skills primarily reside in a newly emerging community of fact-checkers, investigative journalists, tech accountability campaigners, digital human rights organisations and pro-democracy groups. This community has been hit hard by the funding cuts and the policy shifts at tech and social media companies outlined above.

As the ‘worlds’ of cyber security and democratic integrity come together, there is an opportunity to provide a protected space for experimentation. This is where teams can step out of crisis mode and experiment with AI tools in a low-risk, high-support environment.

From this starting point, we’ve surfaced a set of promising ideas that show how AI can strengthen information integrity, which will now move into the pilot stage—from AI training hubs in the Baltics to tools that detect coordinated amplification networks.

What comes next

The challenge is enormous, but the energy, innovation, and resilience coming from CSOs across the region are even greater—huge thanks to the collaborators who have been part of this work.

Together, we're building a more joined-up pipeline of support for CSOs who are defending civic space every day. With the right support, these organisations can help rebuild an information environment that is safer, more transparent, and more democratic, one idea at a time.

If you’d like to dig in further…

🚀 Explore the new pilot pages: Online amplification networks detector and AI Literacy Lab.

📚 How will AI influence diplomacy in 2034?

🎧 Listen to the podcast: What did we think elections would look like in 2025?