Building AI tools in a year that felt like ten: Technical learnings from DevExplorer

A blog by Olivier Mills of Baobab Tech, a Frontier Tech Implementing Partner

Pilot: Using LLMs as a tool for international development professionals

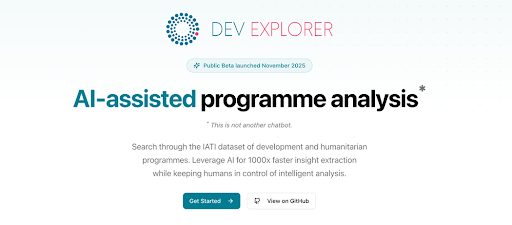

Over the past year, we've been building DevExplorer, an LLM-powered tool to help international development professionals extract insights from International Aid Transparency Initiative (IATI) data. In that time, the underlying technology shifted so dramatically that we rebuilt the tool three times. This post shares what we learned about building AI tools during a period of significant change.

The ground kept moving

A year in AI development feels like a decade elsewhere. The cost of using large language models dropped by 99.5% over twelve months. The Vercel AI SDK, which we use for our interface, went from version two to version five. New capabilities emerged constantly: larger context windows, more efficient embeddings, improved agentic architectures.

We made a deliberate choice to rebuild DevExplorer from scratch multiple times. Each rebuild let us leverage new capabilities. We can now swap language models with two lines of code, which means we can experiment rapidly and adopt better and smaller models as they emerge. This flexibility proved essential in the discovery and learning process. A tool built to "finished" specifications in month three would have been obsolete by month six.
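To illustrate why model swaps can be this cheap, here is a minimal TypeScript sketch of the underlying pattern. It assumes a hypothetical `ChatModel` interface and two stub backends that return canned strings instead of calling a real provider. In the Vercel AI SDK, the equivalent seam is the `model` option passed to functions such as `generateText`. The point is that application code depends only on the shared interface, so adopting a different model touches a single configuration line.

```typescript
// Hypothetical common interface: every backend exposes the same call shape.
interface ChatModel {
  id: string;
  complete(prompt: string): string;
}

type ModelName = "large" | "small";

// Stub backends standing in for real providers (no network calls).
const MODELS: Record<ModelName, ChatModel> = {
  large: { id: "large-general-model", complete: (p) => `[large] ${p}` },
  small: { id: "small-efficient-model", complete: (p) => `[small] ${p}` },
};

// The only line that changes when adopting a different model.
const ACTIVE_MODEL: ModelName = "small";

// Application code never names a specific provider; it receives a ChatModel.
function summariseProgramme(title: string, model: ChatModel = MODELS[ACTIVE_MODEL]): string {
  return model.complete(`Summarise the programme: ${title}`);
}

console.log(summariseProgramme("Rural water access"));
```

Passing a model explicitly (for example `summariseProgramme(title, MODELS.large)`) also makes side-by-side comparisons between models straightforward during experimentation.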

Piloting AI requires a different mindset

Traditional pilots often ask: does this tool work, and can it scale? With AI tools, that framing misses the point. The question becomes: how do we set ourselves up to continuously evolve a product so it remains useful as the technology advances? An important part of this is transparency about how AI is being used. We have fully documented this and made it available here.

This mindset shift also had practical implications for how we approached development. We didn't try to nail down detailed specifications upfront. Instead, we built quickly, showed what was possible, and iterated based on what we learned. The goal was exploring new ways of working with advanced technology, not validating a fixed design. The main changes in our approach came from two advances in LLMs: tool calling (what some call “agentic” flows) and their ability to manage larger context windows (how much text the LLM can take in and reason over in one pass).
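For readers less familiar with tool calling, the loop below sketches the general pattern behind “agentic” flows: at each step the model either requests a tool or returns a final answer, and the application runs the requested tool and feeds the result back into the conversation. This is a generic illustration, not DevExplorer's implementation. `mockModel` is a scripted stand-in for an LLM, and the `searchActivities` tool is hypothetical.

```typescript
// One step of model output: either a request to call a tool, or a final answer.
type ModelStep =
  | { kind: "tool_call"; tool: string; args: Record<string, string> }
  | { kind: "final"; text: string };

type Message = { role: "user" | "assistant" | "tool"; content: string };

// Hypothetical tool registry; a real system might query an index of IATI data here.
const tools: Record<string, (args: Record<string, string>) => string> = {
  searchActivities: (args) => `3 activities found for sector=${args.sector}`,
};

// Scripted stand-in for an LLM: requests a tool once, then answers
// using whatever the tool returned.
function mockModel(history: Message[]): ModelStep {
  const toolResult = history.filter((m) => m.role === "tool")[0];
  if (!toolResult) {
    return { kind: "tool_call", tool: "searchActivities", args: { sector: "health" } };
  }
  return { kind: "final", text: `Answer based on: ${toolResult.content}` };
}

// The agentic loop: call the model, execute any requested tool, append the
// result to the history, and repeat until a final answer or the step limit.
function runAgent(question: string, model: (h: Message[]) => ModelStep, maxSteps = 5): string {
  const history: Message[] = [{ role: "user", content: question }];
  for (let step = 0; step < maxSteps; step++) {
    const out = model(history);
    if (out.kind === "final") return out.text;
    const result = out.tool in tools ? tools[out.tool](out.args) : `Unknown tool: ${out.tool}`;
    history.push({ role: "assistant", content: `call ${out.tool}` });
    history.push({ role: "tool", content: result });
  }
  throw new Error("No final answer within step limit");
}

console.log(runAgent("Which health programmes are active?", mockModel));
```

The `maxSteps` guard matters in practice: without it, a model that keeps requesting tools could loop indefinitely.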

We also learned that introducing AI tools requires more than technical integration. It requires organizations to change their workflows. The value of DevExplorer comes from enabling rapid, iterative analysis. A user can extract insights from 200 programmes in minutes, see something interesting, and immediately run a different analysis. This high iterability changes how people approach their work. But organizations need to be ready to adopt new ways of working, which is a separate challenge from adopting new technology.

Designing for exploration

The shift to iterative analysis shaped how we designed the interface. Traditional dashboards present fixed views of data. DevExplorer needed to support a more dynamic workflow where each analysis informs the next.

Our recipe-based framework, described in a previous post, addresses this by guiding users through building collections of relevant programmes, generating intermediate outputs like customized summaries, and producing final knowledge products. But the real insight was that users don't always know what they want until they see what's possible. Design needs to accommodate that exploration.
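As a rough illustration of the three recipe stages (a collection of programmes, intermediate outputs, a final knowledge product), here is a minimal sketch. The `Programme` shape, the `XM-EXAMPLE` identifiers, and `stubSummariser` are hypothetical placeholders; in practice the summariser would be an LLM call and the collection would come from search over IATI data rather than a simple sector filter.

```typescript
// Hypothetical, simplified view of an IATI activity record.
interface Programme {
  id: string;
  title: string;
  sector: string;
  description: string;
}

interface Summary {
  id: string;
  text: string;
}

// Stand-in for an LLM call that produces a customised summary.
type Summariser = (p: Programme, focus: string) => string;

// Stage 1: build a collection of relevant programmes (a simple sector filter here).
function buildCollection(all: Programme[], sector: string): Programme[] {
  return all.filter((p) => p.sector === sector);
}

// Stage 2: intermediate outputs, one customised summary per programme.
function summariseCollection(collection: Programme[], focus: string, summarise: Summariser): Summary[] {
  return collection.map((p) => ({ id: p.id, text: summarise(p, focus) }));
}

// Stage 3: a final knowledge product assembled from the intermediate outputs.
function buildBrief(focus: string, summaries: Summary[]): string {
  const header = `Brief: ${focus} (${summaries.length} programmes)`;
  return [header].concat(summaries.map((s) => `- ${s.id}: ${s.text}`)).join("\n");
}

// Illustrative sample records (not real IATI data).
const programmes: Programme[] = [
  { id: "XM-EXAMPLE-001", title: "Clinic upgrades", sector: "health", description: "Upgrading rural clinics." },
  { id: "XM-EXAMPLE-002", title: "Teacher training", sector: "education", description: "Training primary teachers." },
  { id: "XM-EXAMPLE-003", title: "Maternal care", sector: "health", description: "Improving maternal care." },
];

const stubSummariser: Summariser = (p, focus) => `${p.title}, relevant to ${focus}: ${p.description}`;

const collection = buildCollection(programmes, "health");
const brief = buildBrief("health outcomes", summariseCollection(collection, "health outcomes", stubSummariser));
console.log(brief);
```

Keeping each stage as a separate function means an intermediate output can be inspected, adjusted, and re-run before the final product is built, which suits the exploratory workflow described above.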

Getting the basics right

As more teams discover what DevExplorer can do, we’ve seen growing demand for variants of our initial use case. Some of these can be addressed with DevExplorer as it is, often straightforwardly, though occasionally users need to do a bit of experimentation. Others call for adjustments to the UI or underlying processes. There are two interesting lessons here: (1) seeing and using DevExplorer has helped teams articulate their requirements more clearly, and (2) when you get the fundamentals right (e.g. UI, search, and document interrogation), it’s possible to build on top of them to support dozens of diverse use cases.

Our work depends on the broader IATI ecosystem. The richest insights come from the documents linked in IATI records, such as evaluations, annual reviews, and business cases. Making those documents more accessible and AI-ready is a priority. We're collaborating with IATI to explore how their systems can better support AI-powered analysis, which aligns with their strategic direction.

What comes next

We're also exploring the development of data connectors so organizations can integrate DevExplorer's logic and data into their existing internal systems. Not everyone wants a public cloud-based interface, and the value shouldn't be locked into a single tool.

On the technical side, we're exploring two directions. First, we want to go deeper on specific document types. Evaluations, for instance, contain rich evidence about what works. Extracting evidence gaps and synthesizing lessons across evaluations requires more specialized, granular approaches than general-purpose analysis.

Second, we're looking at frugal AI. While we use large foundation models for instruction-following and synthesis, smaller fine-tuned models can handle classification, segmentation, and domain-specific extraction more efficiently. A model trained specifically on the language patterns in IATI documentation, covering sectors, thematic areas, lessons learned, and recommendations, could handle much of the workflow without requiring expensive calls to large models. This approach could significantly reduce costs while improving accuracy on specialized tasks.
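The routing idea can be sketched as a simple lookup from task type to model tier. The task categories mirror the ones named above; the tier names and the fixed routing table are hypothetical, and a real system might also route on confidence scores or document type. The example counts calls per tier rather than estimating costs, since actual prices depend on the models chosen.

```typescript
type TaskKind = "classification" | "segmentation" | "extraction" | "synthesis" | "instruction";
type Tier = "small-finetuned" | "large-foundation";

// Narrow, well-defined tasks go to a small specialised model;
// open-ended synthesis and instruction-following go to a large model.
const ROUTES: Record<TaskKind, Tier> = {
  classification: "small-finetuned",
  segmentation: "small-finetuned",
  extraction: "small-finetuned",
  synthesis: "large-foundation",
  instruction: "large-foundation",
};

function route(task: TaskKind): Tier {
  return ROUTES[task];
}

// Count how many calls in a workflow would hit each tier.
function tally(tasks: TaskKind[]): Record<Tier, number> {
  const counts: Record<Tier, number> = { "small-finetuned": 0, "large-foundation": 0 };
  for (const t of tasks) counts[route(t)] += 1;
  return counts;
}

// Example workflow: classify and extract from 200 programmes, then synthesise once.
const workflow: TaskKind[] = [];
for (let i = 0; i < 200; i++) workflow.push("classification", "extraction");
workflow.push("synthesis");
console.log(tally(workflow)); // 400 calls routed to the small model, 1 to the large model
```

Under these assumptions, only the single synthesis step needs the large model, which is where the potential savings in a frugal setup would come from.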

The broader opportunity

DevExplorer started as an experiment to see whether LLMs could help development professionals access knowledge more effectively. A year later, we're more convinced than ever that this technology will transform how the sector works with information. The challenge now is building systems that can evolve as fast as the technology does, while helping organizations adapt their workflows to take advantage of new capabilities. To start helping with this, we have published the code repository here on GitHub for anyone to use. We will keep updating it and are open to contributions.

If you’d like to dig in further…

🚀 Explore this pilot’s profile page Using LLMs as a tool for International Development professionals — Frontier Tech Hub

📚 What does AI mean for data analysts in government? Read our latest blog.

📝 Our AI:AI learning journey explores AI's value, barriers and ethics in development.

Publish date: 23/01/2026

Image credit: Beckett LeClair / https://betterimagesofai.org / https://creativecommons.org/licenses/by/4.0/

DevExplorer image credit: Olivier Mills