How AI can detect faults in broken WASH infrastructure

A blog by the Frontier Tech Hub

Pilot: Integrating AI into the asset management of rural water supply schemes in Nepal

Across Nepal, major progress has been made towards universal access to water and sanitation. Yet behind these headline gains lies a quieter challenge: keeping water infrastructure functioning. While 95% of households now have access to improved water sources, only around a quarter of water supply schemes are fully operational at any given time. A central reason is poor-quality data. The National WASH Information Management System (NWASH) contains records for over one million water assets, but an estimated two-thirds of those records contain inaccuracies about asset condition. This makes it difficult for government at all levels to prioritise repairs, plan maintenance budgets, and ensure communities receive safe and reliable water.

This pilot, led by the Frontier Tech Hub in collaboration with the Government of Nepal, set out to explore whether artificial intelligence could help. The core idea was simple but ambitious: could machine learning analyse the photographs already uploaded to NWASH and support faster, more accurate validation of asset data? Today, engineers manually review photos and text entries to check whether taps, reservoirs and other assets are functioning or damaged. This process is accurate but slow, labour-intensive, and impossible to scale to the full dataset. The pilot did not aim to replace enumerators or fix upstream data collection challenges, but instead focused on whether AI could act as a practical support to existing validation workflows.

Three key questions guided the work:

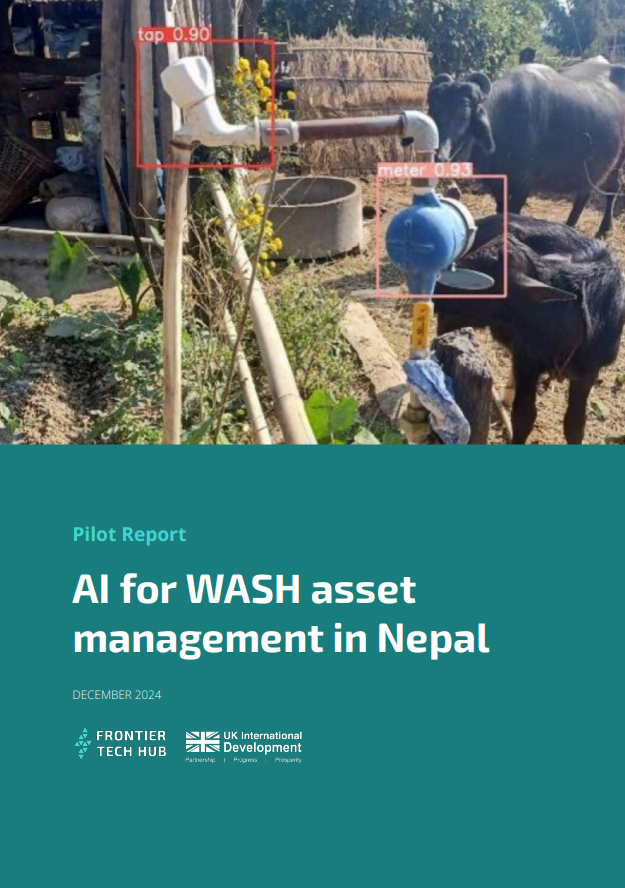

First, can an AI model reliably identify WASH assets and their components, such as taps, meters, platforms and reservoir tanks, from photographs?

Second, can it detect common faults, like missing meters or broken taps, at a level of accuracy comparable to trained engineers?

Third, could such a system be used in practice by government teams, improving efficiency without introducing unacceptable risks or errors?

To answer these questions, the team trained and tested object detection and fault detection models using real NWASH data, iterating closely with government counterparts.

The first major finding was that AI can identify most WASH assets and components with accuracy comparable to human validators. Across more than 1,200 tap images, the model detected taps, meters and concrete posts with over 95% accuracy in most cases. Performance was weaker for platforms, largely due to inconsistent definitions and poor-quality photographs, but even here the model rarely produced false positives. Importantly, errors were usually traceable to issues such as obstructed views or unusual asset designs. This finding suggests that better photo guidelines and continued training could significantly improve results.
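The distinction between missing a component and wrongly reporting one can be made concrete with a small scoring sketch. This is illustrative only and not the pilot's evaluation code: the function name `component_scores`, the label format and the four example photos are all assumptions. It treats human validator labels as ground truth and reports precision (how often a detection is correct) and recall (how many real components are found) per component.

```python
from collections import Counter

def component_scores(human, model, components):
    """Per-component precision and recall, using human labels as ground truth.

    `human` and `model` are parallel lists with one set of component names
    per photo (a hypothetical format chosen for this sketch).
    """
    counts = {c: Counter() for c in components}
    for truth, pred in zip(human, model):
        for c in components:
            if c in pred and c in truth:
                counts[c]["tp"] += 1   # correctly detected
            elif c in pred:
                counts[c]["fp"] += 1   # false positive
            elif c in truth:
                counts[c]["fn"] += 1   # missed component

    scores = {}
    for c, n in counts.items():
        found = n["tp"] + n["fp"]
        present = n["tp"] + n["fn"]
        scores[c] = {
            "precision": n["tp"] / found if found else None,
            "recall": n["tp"] / present if present else None,
        }
    return scores

# Made-up labels for four tap photos, not NWASH data.
human = [{"tap", "platform"}, {"tap", "meter"}, {"tap", "platform"}, {"tap"}]
model = [{"tap"}, {"tap", "meter"}, {"tap", "platform"}, {"tap"}]
scores = component_scores(human, model, ["tap", "meter", "platform"])
```

In this toy data the platform class has perfect precision but only 50% recall, which mirrors the pattern described above: weaker detection, but few false positives.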

The second finding was that AI can detect common faults efficiently and at near-human accuracy. The model performed particularly well at identifying missing or broken taps, absent meters, and incorrect support posts, matching or exceeding 95% accuracy across the full dataset. Corrosion proved harder to detect reliably, especially when damage was minor, highlighting the limits of image-based analysis alone. Nonetheless, the results showed that AI could flag the vast majority of serious faults quickly, enabling human validators to focus their attention where it matters most.

A third, more exploratory finding was that AI can estimate asset dimensions, such as tap height or reservoir size, and flag potential issues that are not routinely checked today. While these estimates were not precise enough to automate decisions, they were accurate enough to act as prompts for further review. This opens up new possibilities for identifying design flaws or data entry errors, such as reservoirs recorded with implausible capacities, that would otherwise go unnoticed.
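As a rough sketch of how an imprecise dimension estimate could still act as a prompt for review, the example below compares a recorded reservoir capacity with the volume implied by estimated dimensions. It assumes a cylindrical tank and a deliberately loose 50% tolerance; the function names, the tolerance and the example figures are hypothetical and do not come from the pilot.

```python
import math

def cylinder_capacity_litres(diameter_m, height_m):
    """Volume of a cylindrical tank in litres (1 cubic metre = 1,000 litres)."""
    return math.pi * (diameter_m / 2) ** 2 * height_m * 1000

def capacity_needs_review(recorded_litres, est_diameter_m, est_height_m,
                          tolerance=0.5):
    """Return True when the recorded capacity is implausible given the estimate.

    `tolerance` is an assumed relative margin. It is kept wide because the
    image-based estimates are only approximate, so this check prompts a
    human review rather than changing any record.
    """
    estimated = cylinder_capacity_litres(est_diameter_m, est_height_m)
    if estimated <= 0:
        return True  # unusable estimate: leave the decision to a person
    return abs(recorded_litres - estimated) / estimated > tolerance

# A tank estimated at 2 m across and 1.5 m tall holds roughly 4,700 litres.
plausible = capacity_needs_review(5_000, 2.0, 1.5)      # within tolerance
implausible = capacity_needs_review(50_000, 2.0, 1.5)   # likely a data entry error
```

Keeping the margin wide means only large mismatches, such as an extra zero in the recorded capacity, are surfaced for review.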

Taken together, the pilot’s conclusion is not that AI should replace human judgement, but that it can meaningfully augment it. The report details how a “co-pilot” approach can dramatically reduce validation time and cost while maintaining data quality. In this hybrid process, AI flags likely issues, and human validators review and confirm changes. Follow-on work integrating the tool into NWASH has already shown validation delays reduced by 95% and operational costs cut by 60%. For governments grappling with ageing infrastructure and limited capacity, this work offers a grounded example of how AI can be deployed not as a silver bullet, but as a practical tool to strengthen public systems at scale.
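The hybrid process can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the tool integrated into NWASH: the `Prediction` class, the `triage` function, the fault names and the 0.8 confidence threshold are all hypothetical. The idea shown is that the model never edits a record itself. It only decides which records a human validator should look at.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Prediction:
    asset_id: str
    fault: Optional[str]   # None means the model sees no fault
    confidence: float      # model confidence between 0 and 1

def triage(predictions, recorded_faults, threshold=0.8):
    """Build a review queue for human validators.

    A record is queued when the model is unsure, or when it is confident
    but disagrees with what is recorded. Confident agreement is skipped.
    """
    queue = []
    for p in predictions:
        recorded = recorded_faults.get(p.asset_id)
        if p.confidence < threshold:
            reason = "low model confidence"
        elif p.fault != recorded:
            reason = "model disagrees with record"
        else:
            continue  # model confidently agrees with the record
        queue.append((p.asset_id, reason))
    return queue

# Made-up example records.
predictions = [
    Prediction("tap-001", None, 0.97),
    Prediction("tap-002", "missing_meter", 0.93),
    Prediction("tap-003", "corrosion", 0.55),
]
recorded_faults = {"tap-001": None, "tap-002": None, "tap-003": "corrosion"}
queue = triage(predictions, recorded_faults)
```

Here only two of the three records reach a validator, which is how a co-pilot setup could save review time while leaving every change to human judgement.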

